Nvidia’s Project Digits is trying to fool everyone

The untold truth about Nvidia's new 'personal supercomputer'

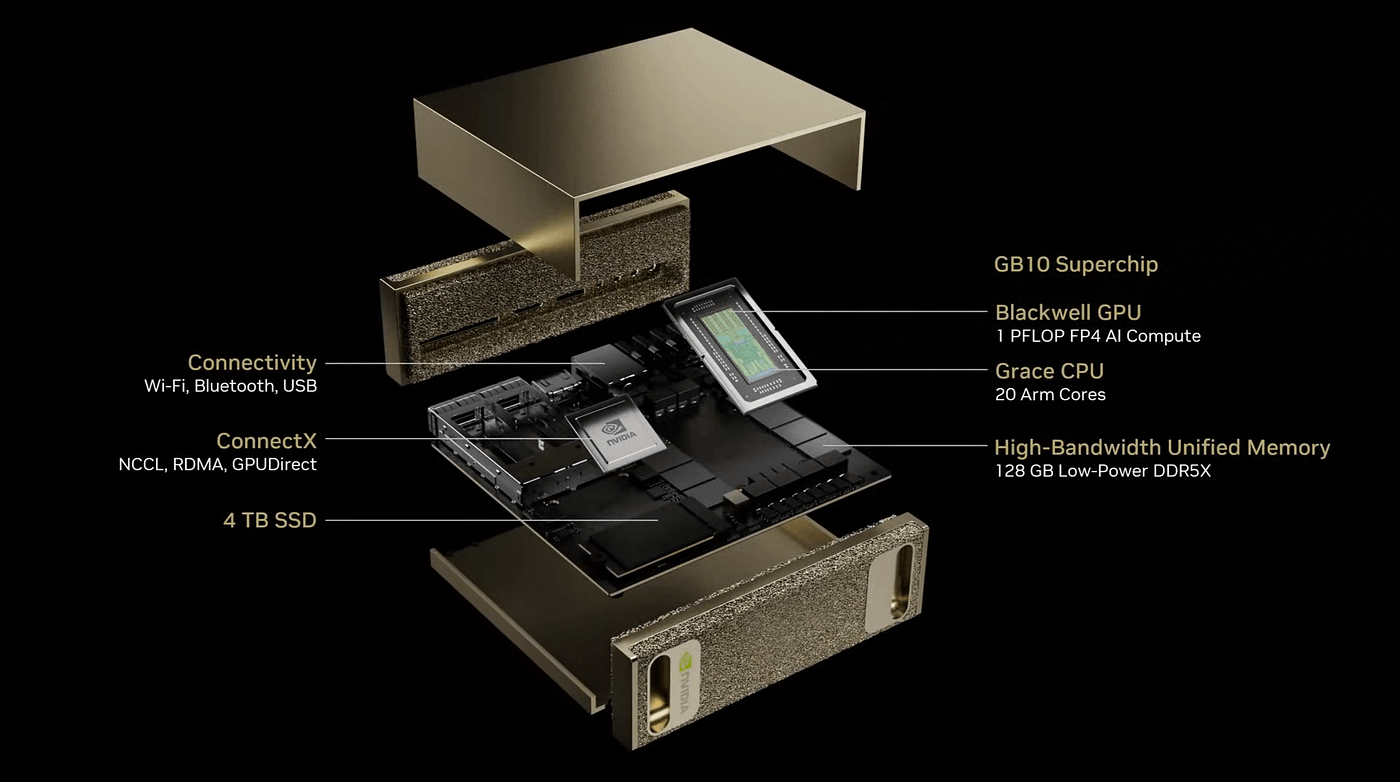

At CES 2025, Nvidia announced its secret new product: Project Digits, the “World’s Smallest AI Supercomputer Capable of Running 200B-Parameter Models”.

It pairs 128GB of “unified memory” with a Blackwell GPU. Both live on the new GB10 Grace Blackwell Superchip, a system-on-a-chip (SoC) based on the NVIDIA Grace Blackwell architecture that delivers up to 1 petaflop of AI performance at FP4 precision.
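To see where the "200B parameters" headline comes from, here is a back-of-the-envelope sketch of my own (not Nvidia's math). It assumes the weights are the only thing in memory, ignoring the KV cache, activations, and the operating system, and it counts 1 GB as 10^9 bytes. The function name `weight_footprint_gb` is just something I made up for illustration.

```python
# Rough weight footprint: number of parameters x bytes per parameter.
# Assumptions (mine, not Nvidia's): weights only, no KV cache, activations,
# or OS overhead; 1 GB = 10**9 bytes.

BYTES_PER_PARAM = {"FP32": 4.0, "FP16": 2.0, "FP8": 1.0, "FP4": 0.5}


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Return the memory needed to hold the weights alone, in GB."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    unified_memory_gb = 128  # the advertised unified memory
    for precision in BYTES_PER_PARAM:
        need = weight_footprint_gb(200e9, precision)
        verdict = "fits" if need <= unified_memory_gb else "does not fit"
        print(f"200B @ {precision}: {need:.0f} GB -> {verdict} in {unified_memory_gb} GB")
```

Under these assumptions, a 200B-parameter model only squeezes into 128GB at FP4 (about 100 GB of weights). At FP8 or higher precision the weights alone are already bigger than the box.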

On the surface, this compact powerhouse might seem like a dream machine for anyone working in AI research, development, and machine learning. I also excitedly wrote about it. However, as I dug deeper, I couldn’t help but find that ‘Digits’ is more a product of confusion than clarity. Here are my thoughts:

At first glance, Project Digits looks a lot like Apple’s Mac Mini, as you can see in the image. Both are tiny boxes that promise ‘superpower’ on your desktop.

But Digits also shares one of the ‘features’ of Apple’s products:

Lack of Customizability (both hardware and software)

In the world of high-performance computing, workloads can vary dramatically. What works for one AI researcher might not work for another. For example, some users might require more storage, while others might need higher-end GPUs or additional RAM to process larger datasets or train more complex models.

Digits, however, is a sealed box. Once you hit its resource limits, there’s no easy way to upgrade or expand. This rigidity feels at odds with the very nature of AI development, which thrives on scalability and flexibility. For $3,000, users should expect a machine that grows with them, not one that quickly becomes obsolete. (Nvidia did mention that two Digits units can be linked together, but won’t that be an expensive link?)

On the software side, I’m not sure of the details yet, but it seems Nvidia will ship it with its own software suite:

“Project DIGITS users can access an extensive library of NVIDIA AI software for experimentation and prototyping, including software development kits, orchestration tools, frameworks and models available in the NVIDIA NGC catalog and on the NVIDIA Developer portal.”

Maybe even its own operating system? Let’s wait and see as the launch date gets closer.

What’s the untold story?

Nvidia has positioned this product for AI professionals:

“enterprises and researchers can prototype, fine-tune and test models on local Project DIGITS systems running Linux-based NVIDIA DGX OS, and then deploy them seamlessly on NVIDIA DGX Cloud™, accelerated cloud instances or data center infrastructure.” — from Nvidia web page

The reality is that most AI enthusiasts and small-scale developers already own or rent a GPU, most likely an Nvidia one. Everything in that pitch can already be done on those GPUs. So what would motivate them to buy a newer-generation GPU?

It’s “bigger VRAM”. (Currently, most consumer GPUs top out at 24GB of VRAM. More VRAM lets you train and run AI models with more parameters.)
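To put rough numbers on why VRAM is the thing people want, here is a small sketch of my own that turns the question around: given a memory budget, how many parameters fit? It assumes every byte is available for weights and that 1 GB is 10^9 bytes. The helper `max_params_billions` is a made-up name for illustration.

```python
# How many parameters fit in a given amount of memory?
# Assumptions (mine): weights only, all memory usable, 1 GB = 10**9 bytes.


def max_params_billions(memory_gb: float, bits_per_param: int) -> float:
    """Return the largest weight count, in billions, that fits in memory_gb."""
    bytes_per_param = bits_per_param / 8
    return memory_gb / bytes_per_param


if __name__ == "__main__":
    for memory_gb in (24, 128):
        for bits in (16, 8, 4):
            size = max_params_billions(memory_gb, bits)
            print(f"{memory_gb} GB @ {bits}-bit: ~{size:.0f}B parameters")
```

By this estimate a 24GB card holds roughly a 12B-parameter model at 16-bit precision, or about 48B at 4-bit. These are upper bounds for inference only. Training needs far more room, because gradients and optimizer state also have to fit.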

Nvidia certainly knows this. Their answer? Project Digits: if you want more VRAM, you have to buy everything else that comes with it!

They just don’t want to put more VRAM on your GPU.

Let’s not be fooled by that!

With AI (LLMs, image and video models) advancing so fast, I think small models will be able to do much more. Many of us can achieve our goals with a lower-end GPU or PC for a fraction of the price. Such hardware provides sufficient performance for smaller models and experiments without breaking the bank. And if you really do need more computing resources, I don’t think Digits’ 128GB of memory will be sufficient either.

Thanks for reading! Let me know what you think in the comments!